Nvidia has announced its H100 accelerator for data centers and HPC. This PCIe 5.0 GPU is produced on a TSMC 4N node and features HBM3 memory with up to 3TB/s bandwidth. The Nvidia H100 succeeds the current A100 GPU.

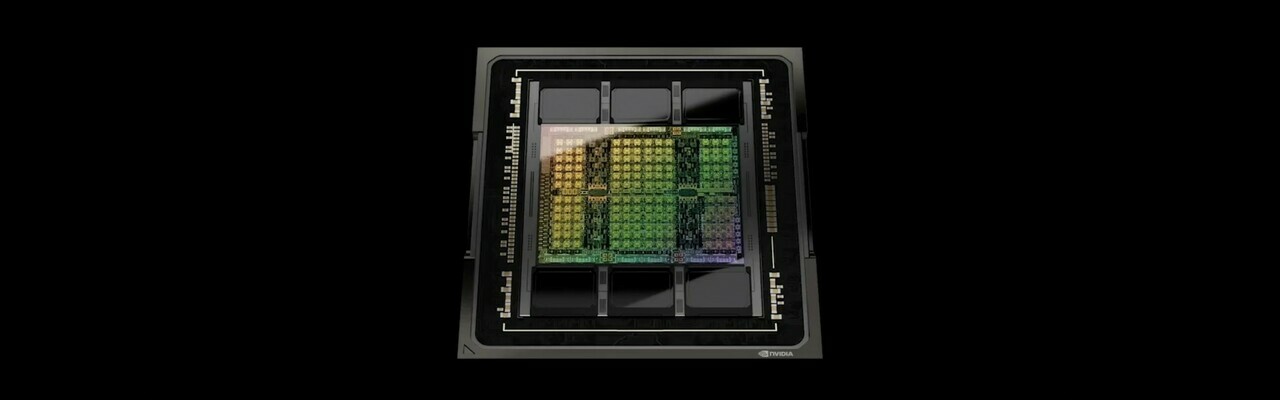

The Nvidia H100 GPU is based on Hopper, a GPU architecture aimed at data centers and HPC and the successor to Ampere in this field. The H100 consists of 80 billion transistors and is produced on the TSMC 4N process. This is a modified version of the TSMC N4 process, made specifically for Nvidia. The Nvidia H100 is again a monolithic chip, just like the A100. Initially, it was rumored that Nvidia would offer a data center GPU with a multi-chip design, consisting of multiple dies. AMD did just that last year with the Instinct MI200 series.

The current A100 is produced on a modified version of the 7 nm TSMC process and consists of 54.2 billion transistors. Nvidia claims that the H100 provides up to three times more computing power than the A100 in fp16, tf32 and fp64 and six times more in fp8. The H100 GPU has a die size of 814 mm². This is slightly smaller than the current GA100, which has an area of 826 mm².

Nvidia H100 SXM5 (left) and H100 PCIe

HBM3 for SXM5, HBM2e for PCIe variant

Nvidia offers two variants of the H100. The focus appears to be on the SXM5 variant, which has 132 streaming multiprocessors (SMs) for a total of 16,896 fp32 CUDA cores. This card gets 50MB L2 cache and 80GB HBM3 memory on a 5120-bit memory bus, for a maximum memory bandwidth of about 3TB/s. It has a tdp of 700W. Users can combine multiple H100 SXM GPUs with Nvidia’s NVLink interconnect. According to Nvidia, the fourth generation of this interconnect offers bandwidths of up to 900GB/s.

There will also be a PCIe 5.0 x16 variant for more standard servers. This model gets 114 SMs and 14,592 CUDA cores. Furthermore, the PCIe variant gets a 40MB L2 cache, just like the current A100. Remarkably, the PCIe variant still has slower HBM2e memory, according to the Hopper white paper that Nvidia published on Tuesday. At 80GB, the amount of memory equals that of the SXM model. The PCIe variant gets a tdp of 350W.
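
The core counts quoted for the two variants can be cross-checked against the SM counts. The sketch below assumes 128 fp32 CUDA cores and four tensor cores per SM; these per-SM figures are not stated in the article and are inferred from its numbers. Under that assumption, the PCIe core count divides into 114 SMs, as in the text, and the SXM5 core count into 132 SMs.

```python
# Assumption: 128 fp32 CUDA cores and 4 tensor cores per SM.
# These per-SM figures are inferred from the article's numbers, not quoted from it.
FP32_CORES_PER_SM = 128
TENSOR_CORES_PER_SM = 4


def sm_count(cuda_cores: int) -> int:
    """Return the number of SMs implied by a CUDA core count."""
    sms, remainder = divmod(cuda_cores, FP32_CORES_PER_SM)
    if remainder:
        raise ValueError("core count is not a multiple of the cores per SM")
    return sms


# fp32 CUDA core counts as given in the article
variants = {"SXM5": 16896, "PCIe": 14592}

derived = {
    name: {
        "sms": sm_count(cores),
        "tensor_cores": sm_count(cores) * TENSOR_CORES_PER_SM,
    }
    for name, cores in variants.items()
}

for name, info in derived.items():
    print(name, info)
```

The derived tensor core counts can be compared with the tensor core row of the specification table at the end of the article.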

New Hopper features: Transformer Engine, DPX instruction set

The Hopper architecture itself has also been changed compared to Ampere. Hopper and the H100 feature a new Transformer Engine, which combines a new type of Tensor core with a software suite for processing the fp8 and fp16 formats when training transformer networks. These are a type of deep learning model.

For cloud computing, the H100 can be divided into up to seven instances. Ampere was already able to do this, but with Hopper the instances are completely isolated from each other. In addition, Hopper gets a new DPX instruction set dedicated to dynamic programming. Nvidia claims that in this use case the H100 performs up to seven times better than the A100, which lacks DPX.

DGX Systems and SuperPods

Nvidia also offers the DGX H100 system with eight H100 GPUs. Together, these give the system 640GB of HBM3 memory with a total bandwidth of 24TB/s. Users can connect up to 32 DGX systems via NVLink. Nvidia calls this the DGX SuperPod. Such a 32-node system should offer one exaflops of computing power, Nvidia claims. That figure refers to fp8 computing power. The company is itself building the Eos supercomputer, consisting of 18 DGX SuperPods with a total of 4,608 H100 GPUs.
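
The aggregate figures in this section follow from simple multiplication of the per-GPU numbers. A minimal sketch of that arithmetic, using only values given in the article (the variable names are illustrative, and the per-GPU bandwidth is the rounded "about 3TB/s" figure):

```python
# Per-GPU and per-system figures as quoted in the article
GPUS_PER_DGX = 8
MEMORY_PER_GPU_GB = 80
BANDWIDTH_PER_GPU_TBS = 3  # rounded: "about 3TB/s" for the SXM5 variant
DGX_PER_SUPERPOD = 32
SUPERPODS_IN_EOS = 18

# One DGX H100 system
dgx_memory_gb = GPUS_PER_DGX * MEMORY_PER_GPU_GB
dgx_bandwidth_tbs = GPUS_PER_DGX * BANDWIDTH_PER_GPU_TBS

# One DGX SuperPod and the full Eos supercomputer
gpus_per_superpod = GPUS_PER_DGX * DGX_PER_SUPERPOD
eos_gpus = gpus_per_superpod * SUPERPODS_IN_EOS

print(f"DGX H100: {dgx_memory_gb} GB memory, {dgx_bandwidth_tbs} TB/s total bandwidth")
print(f"SuperPod: {gpus_per_superpod} GPUs, Eos: {eos_gpus} GPUs")
```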

Nvidia has yet to announce the price of the H100 GPU. It is also not yet clear what the DGX H100 systems or the DGX H100 SuperPods will cost. Hopper is not expected to be used in consumer GPUs. According to reports, Nvidia will introduce a separate Lovelace architecture later this year for its new GeForce RTX graphics cards.

Nvidia Hopper alongside previous Nvidia HPC GPUs

| Architecture | Hopper | Ampere | Volta |
|---|---|---|---|
| GPU | H100, TSMC 4 nm | GA100, TSMC 7 nm | GV100, TSMC 12 nm |
| Die area | 814 mm² | 826 mm² | 815 mm² |
| Transistors | 80 billion | 54 billion | 21.1 billion |
| CUDA cores (fp32) | SXM: 16,896; PCIe: 14,592 | 6912 | 5120 |
| Tensor cores | SXM: 528; PCIe: 456 | 432 | 640 |
| Memory | SXM: 80 GB HBM3; PCIe: 80 GB HBM2e | 40 GB / 80 GB HBM2e | 16 GB / 32 GB HBM2 |
| FP32 vector | SXM: 60 Tflops; PCIe: 48 Tflops | 19.5 Tflops | 15.7 Tflops |
| FP64 vector | SXM: 30 Tflops; PCIe: 24 Tflops | 9.7 Tflops | 7.8 Tflops |
| FP16 tensor | SXM: 1000 Tflops; PCIe: 800 Tflops | 312 Tflops | 125 Tflops |
| TF32 tensor | SXM: 500 Tflops; PCIe: 400 Tflops | 156 Tflops | Not available |
| FP64 tensor | SXM: 60 Tflops; PCIe: 48 Tflops | 19.5 Tflops | Not available |
| INT8 tensor | SXM: 2000 Tops; PCIe: 1600 Tops | 624 Tops | Not available |
| Tdp | up to 700 watts | up to 400 watts | up to 300 watts |
| Form factor | SXM5 / PCIe 5.0 | SXM4 / PCIe 4.0 | SXM2 / PCIe 3.0 |
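
The throughput figures in the table can be used to check Nvidia's claim of roughly three times the A100's computing power. The sketch below divides the H100 SXM values by the A100 values for each format; the dictionary names are illustrative, and the numbers are copied from the table in Tflops.

```python
# Peak throughput in Tflops, taken from the table above
h100_sxm = {
    "fp32 vector": 60,
    "fp64 vector": 30,
    "fp16 tensor": 1000,
    "tf32 tensor": 500,
    "fp64 tensor": 60,
}
a100 = {
    "fp32 vector": 19.5,
    "fp64 vector": 9.7,
    "fp16 tensor": 312,
    "tf32 tensor": 156,
    "fp64 tensor": 19.5,
}

# Ratio of H100 SXM to A100 throughput per format
speedup = {fmt: h100_sxm[fmt] / a100[fmt] for fmt in h100_sxm}

for fmt, ratio in speedup.items():
    print(f"{fmt}: {ratio:.2f}x")
```

With these table values, every ratio falls between 3.0 and 3.3, in line with the threefold claim for fp16, tf32 and fp64.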